AI-Driven Storytelling with Multi-Agent LLMs - Part II

Second part of the summaries of ongoing research with LLMs at the University of Havana

In the previous article, we explored how multi-agent architectures can inject life and autonomy into AI-generated stories, allowing characters to pursue their own goals in a dynamic world. But what happens when you add a human to the mix? Interactive storytelling raises the stakes: now the system must not only maintain coherence and character consistency, but also respond—intelligently and flexibly—to unpredictable user input.

This is a much harder problem. The system must walk a tightrope: it needs to be consistent enough to avoid plot holes and character drift, but flexible enough to let the user meaningfully shape the narrative, even in ways the system never anticipated. In this second article, I present our second undergraduate thesis at the University of Havana, which takes on this challenge head-on.

If you haven’t read Part I of this series, I recommend starting there for the full context and motivation behind our research line. In short: we’re not trying to “solve” storytelling, but to use it as a demanding testbed for developing robust techniques in LLM governance, safety, and control.

The Core Problem

Let’s get to the heart of the matter: Why is interactive storytelling so hard? The answer lies in a fundamental tension—one that anyone who’s ever played a narrative game or written a choose-your-own-adventure story will recognize. It’s the struggle between agency (the user’s freedom to make meaningful choices) and control (the system’s responsibility to keep the story coherent, engaging, and believable).

Agency is what makes interactive storytelling magical. It’s the feeling that your decisions actually matter—that you can steer the story in unexpected directions or even break the mold of the narrative world. In a perfect system, you’d be able to do anything your imagination conjures: befriend the villain, burn down the tavern, or turn the hero into a poet. The system would adapt, improvise, and keep the experience compelling.

But here’s the rub: pure agency, without constraints, is a recipe for chaos. If the system simply accepts every user input at face value, the story can quickly unravel. Characters might act out of character, plotlines can contradict themselves, and the narrative world loses its internal logic. The result? A story that feels less like a crafted experience and more like a series of disconnected improv skits.

On the flip side, control is the system’s way of protecting the integrity of the story. Think of it as the invisible hand of the “narrative director”—the set of rules, memory, and logic that ensures events make sense, characters stay true to themselves, and the world remains believable. Control is what prevents the protagonist from suddenly teleporting to Mars or resurrecting a character who just died (unless, of course, the story’s logic allows for it).

But with too much control, the story becomes a railroad. The user’s choices are ignored, overwritten, or reduced to cosmetic differences. The narrative might be coherent, but it’s no longer interactive in any meaningful sense. The magic of agency is lost.

Our Proposal

Let’s open the hood on this architecture. The core idea is straightforward but powerful: break down the complex process of interactive storytelling into a series of specialized agents, each responsible for a distinct narrative function, and orchestrate their collaboration through a well-defined workflow. This modular approach is what allows the system to achieve both flexibility (adapting to user decisions) and consistency (maintaining narrative logic and emotional coherence).

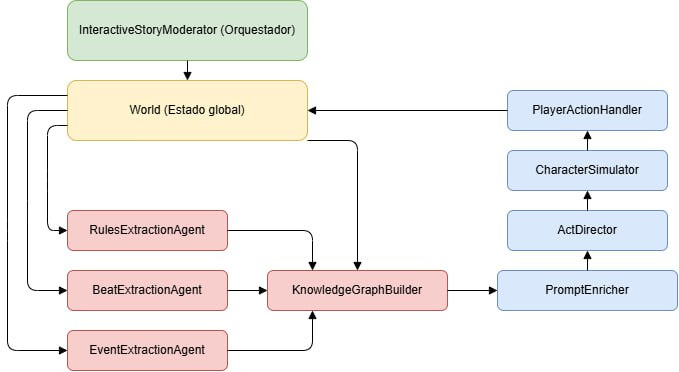

We defined the following agents:

Orchestrator of Interactive Stories: Acts as the central coordinator. This agent triggers each phase in the correct sequence, manages the overall flow, and ensures all updates to the knowledge graph and narrative state happen in the right order. Without this conductor, the “orchestra” of agents would quickly fall out of sync.

Rule Extraction Agent: Extracts the fundamental rules and constraints of the story world (e.g., “magic is forbidden,” “time flows forward”). This agent sets the boundaries for all subsequent events, ensuring that the narrative remains internally consistent.

Key Beat Extraction Agent: Identifies the pivotal moments or “beats” from the user’s synopsis or the evolving story. These serve as narrative milestones, guiding the rhythm and progression of the plot.

Event Extraction Agent: Detects and classifies concrete narrative events, assigning actors and consequences. This agent feeds the knowledge graph with actionable story content.

Knowledge Graph Builder: Maintains a dynamic, structured representation of all entities, relationships, rules, and events. The graph acts as both memory and source of truth, ensuring that the story doesn’t lose track of details or contradict itself as it evolves.

Prompt Enricher: Gathers the most relevant context from the knowledge graph and narrative history, then constructs a rich prompt for the language model. This ensures that every new scene is generated with full awareness of what’s come before.

Act Director: Generates the next scene using the enriched prompt. This agent is responsible for advancing the plot while respecting the current state and constraints of the story world.

Character Simulator: Simulates the emotional and behavioral responses of characters to the latest events. This keeps character arcs believable and emotionally consistent.

Player Action Handler: Integrates the user’s decisions into the narrative. It updates the knowledge graph and narrative history, ensuring that user choices have real, lasting impact on the unfolding story.
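To make the "memory and source of truth" idea concrete, here is a minimal sketch of a knowledge graph that stores facts, world rules, and events, and refuses events that break a rule. Everything in it (the `KnowledgeGraph` and `Event` classes, the triple format, the two example rules) is an illustrative assumption, not the data model used in the thesis.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set, Tuple

@dataclass(frozen=True)
class Event:
    """A concrete narrative event with an actor and an optional target."""
    actor: str
    action: str
    target: Optional[str] = None

# A rule is a description plus a predicate(graph, event) -> True if allowed.
Rule = Tuple[str, Callable[..., bool]]

@dataclass
class KnowledgeGraph:
    """Hypothetical story memory: facts as triples, plus rules and events."""
    facts: Set[Tuple[str, str, str]] = field(default_factory=set)
    rules: List[Rule] = field(default_factory=list)
    events: List[Event] = field(default_factory=list)

    def add_fact(self, subject: str, relation: str, obj: str) -> None:
        self.facts.add((subject, relation, obj))

    def has_fact(self, subject: str, relation: str, obj: str) -> bool:
        return (subject, relation, obj) in self.facts

    def violations(self, event: Event) -> List[str]:
        """Return the descriptions of every rule the event would break."""
        return [desc for desc, allowed in self.rules if not allowed(self, event)]

    def record(self, event: Event) -> bool:
        """Store the event only if it breaks no rule; report success."""
        if self.violations(event):
            return False
        self.events.append(event)
        return True

kg = KnowledgeGraph()
# Example world rules (assumed for illustration).
kg.rules.append(("magic is forbidden", lambda g, ev: ev.action != "cast_spell"))
kg.rules.append(("the dead do not act", lambda g, ev: not g.has_fact(ev.actor, "is", "dead")))
kg.add_fact("Mira", "is", "dead")

accepted = kg.record(Event("Aldo", "open_door", "cellar"))
broken = kg.violations(Event("Mira", "cast_spell"))
```

In a real system the predicates would likely be derived from the Rule Extraction Agent's output, and a rejected event could be rewritten or explained to the user instead of being silently dropped. That is one way to balance control against agency.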

Here is a brief overview of how all these agents collaborate in a typical interactive session.

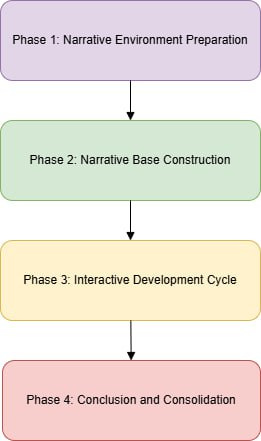

Preparation Phase

The Orchestrator initializes an empty knowledge graph and sets up the narrative environment.

The Rule Extraction Agent processes the initial synopsis or world description to establish the core rules.

All agents are initialized and ready to process input as the story unfolds.

Narrative Base Construction

The Key Beat Extraction Agent identifies the main plot points from the user’s synopsis.

The Event Extraction Agent breaks down the synopsis into actionable events.

The Knowledge Graph Builder integrates these elements into the graph, establishing the initial state of the story world.
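As a rough sketch of this phase, suppose the extraction agents return their findings as JSON. The snippet below turns such a payload into typed nodes and edges. The JSON schema, the node id prefixes, and the `build_graph` function are all assumptions made for illustration. In a real run, the payload would come from the LLM-backed agents rather than a hard-coded string.

```python
import json

# Hypothetical output of the extraction agents; the schema is an assumption.
EXTRACTED = """
{
  "rules": ["magic is forbidden"],
  "beats": ["Ana finds the map", "Ana confronts the smuggler"],
  "events": [
    {"id": "e1", "actor": "Ana", "action": "finds", "target": "map", "beat": 0},
    {"id": "e2", "actor": "Ana", "action": "confronts", "target": "smuggler", "beat": 1}
  ]
}
"""

def build_graph(payload: str) -> dict:
    """Integrate rules, beats, and events into one node/edge structure."""
    data = json.loads(payload)
    nodes = {}   # node id -> {"type": ..., "label": ...}
    edges = []   # (source id, relation, target id)

    for i, rule in enumerate(data["rules"]):
        nodes[f"rule:{i}"] = {"type": "rule", "label": rule}

    for i, beat in enumerate(data["beats"]):
        nodes[f"beat:{i}"] = {"type": "beat", "label": beat}
        if i > 0:
            # Beats are ordered milestones, so chain them in sequence.
            edges.append((f"beat:{i - 1}", "precedes", f"beat:{i}"))

    for ev in data["events"]:
        ev_id = f"event:{ev['id']}"
        nodes[ev_id] = {"type": "event", "label": ev["action"]}
        for role in ("actor", "target"):
            ent_id = f"entity:{ev[role]}"
            # Entities are created once and shared across events.
            nodes.setdefault(ent_id, {"type": "entity", "label": ev[role]})
            edges.append((ev_id, role, ent_id))
        edges.append((ev_id, "part_of", f"beat:{ev['beat']}"))

    return {"nodes": nodes, "edges": edges}

graph = build_graph(EXTRACTED)
```

Because entities are shared across events, "Ana" appears as a single node. That sharing is what later lets the system ask what a given character has done so far.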

Interactive Development Loop

Prompt Enrichment: The Prompt Enricher queries the knowledge graph for the most relevant context (recent events, character states, world rules) and constructs a prompt for the LLM.

Scene Generation: The Act Director uses this prompt to generate the next scene, ensuring continuity and literary quality.

Character Simulation: The Character Simulator infers and documents how each character reacts to the new developments, updating their emotional and behavioral states.

User Interaction: The Player Action Handler presents choices to the user, receives their input, and encodes the resulting actions as new events in the knowledge graph.

The cycle repeats, with each agent building on the outputs and updates of the others, ensuring that every new scene is both a logical continuation and a meaningful response to user agency.
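The loop above can be sketched in a few lines, assuming the story state is a plain dictionary and the LLM is any callable from prompt to text. The function names, the state layout, and the toy mood rule are hypothetical. The stub model simply echoes part of its context so the example runs without any API.

```python
from typing import Callable, Dict

def enrich_prompt(state: Dict, max_events: int = 3) -> str:
    """Prompt Enricher: gather rules, recent events, and character moods."""
    recent = state["events"][-max_events:]
    moods = ", ".join(f"{name}={mood}" for name, mood in sorted(state["moods"].items()))
    lines = [
        "Rules: " + "; ".join(state["rules"]),
        "Recent events: " + "; ".join(recent),
        "Moods: " + moods,
        "Write the next scene.",
    ]
    return "\n".join(lines)

def simulate_characters(scene: str, moods: Dict[str, str]) -> Dict[str, str]:
    """Character Simulator (toy rule): anything burning leaves everyone shaken."""
    if "burn" in scene.lower():
        return {name: "shaken" for name in moods}
    return dict(moods)

def run_turn(state: Dict, llm: Callable[[str], str], player_choice: str) -> str:
    """One pass of the loop: enrich, generate, simulate, integrate the choice."""
    prompt = enrich_prompt(state)
    scene = llm(prompt)                                  # Act Director
    state["scenes"].append(scene)
    state["moods"] = simulate_characters(scene, state["moods"])
    state["events"].append(f"player: {player_choice}")   # Player Action Handler
    return scene

def stub_llm(prompt: str) -> str:
    """Stand-in for a real model: echoes the 'Recent events' line of the prompt."""
    return "SCENE <- " + prompt.splitlines()[1]

state = {
    "rules": ["magic is forbidden"],
    "events": ["Ana finds the map"],
    "moods": {"Ana": "calm"},
    "scenes": [],
}
for choice in ["burn the map", "flee the city"]:
    run_turn(state, stub_llm, choice)
```

The point of the sketch is the data flow: the user's first choice only becomes visible to the model on the following turn, because it reaches the prompt through the shared state rather than being passed to the generator directly.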

Conclusion and Consolidation

When the user signals the end of the story, the Orchestrator triggers the finalization phase.

The Knowledge Graph Builder ensures all narrative threads are resolved and the story is logically complete.

The system outputs the full, coherent narrative, along with a structured map of how the user’s choices shaped the journey.
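One simple way to picture the finalization step is as two passes over an event log: one that finds narrative threads that were opened but never closed, and one that groups system events under the user choice that preceded them. The log format and both helper functions below are assumptions for illustration only. The thesis may represent threads and choices quite differently.

```python
from typing import Dict, List

def unresolved_threads(events: List[Dict]) -> List[str]:
    """Return threads that were opened but never closed, in opening order."""
    open_threads: List[str] = []
    for ev in events:
        thread = ev.get("thread")
        if ev.get("opens") and thread not in open_threads:
            open_threads.append(thread)
        elif ev.get("closes") and thread in open_threads:
            open_threads.remove(thread)
    return open_threads

def choice_map(events: List[Dict]) -> Dict[str, List[str]]:
    """Group each system event under the most recent player choice."""
    mapping: Dict[str, List[str]] = {}
    current = None
    for ev in events:
        if ev["source"] == "player":
            current = ev["text"]
            mapping.setdefault(current, [])
        elif current is not None:
            mapping[current].append(ev["text"])
    return mapping

# Hypothetical event log from a short session.
log = [
    {"source": "system", "text": "A stranger offers Ana a map", "thread": "map", "opens": True},
    {"source": "player", "text": "accept the map"},
    {"source": "system", "text": "Smugglers start following Ana", "thread": "smugglers", "opens": True},
    {"source": "player", "text": "hand the map to the guard"},
    {"source": "system", "text": "The guard arrests the smugglers", "thread": "smugglers", "closes": True},
]

pending = unresolved_threads(log)
journey = choice_map(log)
```

Here `pending` still contains the "map" thread, which a finalization phase could use as a signal to generate one more resolving scene before printing the story.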

How does this stack up?

Let’s be honest: it’s easy to make grand claims about “better stories” and “more engaging AI,” but how do you actually measure narrative quality in a rigorous, meaningful way? For this thesis, the evaluation pitted the multi-agent system head-to-head against a baseline that used the same underlying LLM (Llama-3-8B-Instruct) but without any agentic orchestration. This setup means that any improvement can be attributed to the architecture rather than to a bigger model, more data, or secret sauce under the hood.

The Metrics

The evaluation used a mix of qualitative and structured criteria, focusing on the aspects that matter most for interactive storytelling. Here’s the breakdown:

Narrative Coherence: Does the story flow logically from scene to scene, or do we get abrupt jumps and plot holes?

Protagonist Agency: Do the user’s choices and the protagonist’s actions actually shape the direction of the story in a meaningful way?

Adaptation to User Input: Does the system integrate user decisions naturally, creating real consequences and new narrative branches?

Conflict and Tension: Is the story able to build suspense, escalate challenges, and avoid flat or artificial drama?

Originality and Creativity: Are the story elements fresh and surprising, or do we just get recycled tropes?

Clarity and Literary Style: Is the writing evocative, immersive, and stylistically rich?

Thematic Consistency: Are the core themes and motifs reinforced throughout, or do they get lost along the way?

Emotional Consistency: Do the characters’ emotions evolve logically and believably?

Context Maintenance: Does the system remember important details, settings, and relationships as the story unfolds, or does it “forget” as it goes?

Each experiment involved presenting both systems with the same narrative synopsis and a sequence of user choices. Human evaluators then compared the resulting stories using these criteria, looking for both strengths and weaknesses in each approach.
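If you want to run a similar comparison yourself, the bookkeeping can be as simple as tallying which system each evaluator preferred on each criterion. The sketch below assumes judgments are recorded as (criterion, preferred system) pairs. The votes in it are placeholders that only exercise the code and are not results from the thesis.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

CRITERIA = [
    "Narrative Coherence", "Protagonist Agency", "Adaptation to User Input",
    "Conflict and Tension", "Originality and Creativity",
    "Clarity and Literary Style", "Thematic Consistency",
    "Emotional Consistency", "Context Maintenance",
]

def tally(judgments: Iterable[Tuple[str, str]]) -> Dict[str, Counter]:
    """Count preferences ("multi_agent", "baseline", or "tie") per criterion."""
    table = {criterion: Counter() for criterion in CRITERIA}
    for criterion, preferred in judgments:
        if criterion not in table:
            raise ValueError(f"unknown criterion: {criterion}")
        table[criterion][preferred] += 1
    return table

def win_rate(counts: Counter, system: str) -> Optional[float]:
    """Share of decided (non-tie) comparisons won by `system`, or None if none."""
    decided = counts["multi_agent"] + counts["baseline"]
    return counts[system] / decided if decided else None

# Placeholder votes, NOT data from the thesis.
votes = [
    ("Narrative Coherence", "multi_agent"),
    ("Narrative Coherence", "baseline"),
    ("Narrative Coherence", "multi_agent"),
    ("Context Maintenance", "tie"),
]
table = tally(votes)
coherence_rate = win_rate(table["Narrative Coherence"], "multi_agent")
context_rate = win_rate(table["Context Maintenance"], "multi_agent")
```

Keeping ties out of the denominator avoids rewarding a system for comparisons where evaluators saw no difference. Reporting the raw counts alongside the rate keeps small sample sizes visible.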

The Findings

The results were clear and consistent across multiple story scenarios. The multi-agent system outperformed the baseline on almost every metric that matters for interactive storytelling.

Stories generated by the multi-agent system maintained a logical chain of cause and effect, with smooth transitions between scenes and a strong sense of narrative momentum. The protagonist’s decisions had real weight, steering the story into new territory and producing consequences that felt both meaningful and surprising. User choices were not just tacked on—they shaped the evolution of the plot, the emergence of conflict, and the protagonist’s emotional journey.

Perhaps most striking, the multi-agent system produced narratives with richer literary style and more vivid descriptions, even though it used the same LLM as the baseline. This suggests that the way you structure and feed context to the model—through agents that carefully curate, update, and enrich the narrative state—can unlock much more of the LLM’s creative potential.

The system also excelled at maintaining context and thematic unity. Key details, settings, and character motivations were carried forward across scenes, avoiding the notorious “memory loss” problem of vanilla LLMs. Emotional arcs were more believable, and the stories avoided the repetitive, cliché-driven traps that often plague automated narrative generation.

By contrast, the baseline system struggled with abrupt transitions, shallow integration of user choices, and a tendency toward flat or generic storytelling. Protagonist agency was often illusory—choices rarely changed the story in a meaningful way. The writing, while functional, lacked the immersive quality and emotional resonance achieved by the multi-agent approach. Context was easily lost, leading to inconsistencies and a less engaging experience overall.

Final Thoughts

This thesis, together with our complementary research, suggests that you don’t always need to fine-tune, retrain, or scale up your language model to get noticeably better results. By layering a multi-agent system on top of a standard LLM, we obtained gains in coherence, adaptability, and responsiveness to the user without changing the model itself. In this setting, the architecture made the difference.

Why does this matter? Because the core problems of interactive storytelling—long-term memory, integrating unpredictable user input, keeping characters and worlds believable—are exactly the challenges we face in building safe, controllable AI everywhere. The multi-agent architecture acts as a governance layer, orchestrating the model’s raw power through explicit rules, dynamic memory, and transparent workflows. It’s not just about telling better stories; it’s about showing that smart design can outpace brute force.

If we want AI that’s powerful and safe, we shouldn’t just throw more data or compute at the problem. We should design smarter systems—ones that let us steer, audit, and trust their outputs.

If you want to browse the generated stories and read the full thesis (in Spanish), check this GitHub repository.
