Why reliable AI requires a paradigm shift

Hallucinations are the fundamental barrier to the widespread use of AI, and they won't be solved anytime soon.

In the context of AI systems, hallucinations refer to the phenomenon where an AI model generates outputs that appear plausible and coherent but do not accurately reflect real-world facts or the system's intended purpose. These hallucinations can manifest in various forms, such as fabricated information, nonsensical responses, or outputs that are inconsistent with the input data. Hallucinations can occur in various AI applications, from natural language processing to computer vision and beyond.

Addressing the issue of hallucinations in AI is crucial for ensuring the reliable and trustworthy deployment of these systems. Hallucinations can lead to erroneous decision-making, false conclusions, and potentially harmful outcomes, especially in critical applications such as healthcare, finance, and public safety, just to mention a few. By understanding the causes and mechanisms behind hallucinations, researchers and developers can work to mitigate these issues, improving the overall robustness and reliability of AI systems.

In this issue, I want to explore the nature and impact of hallucinations in current generative AI models, focusing specifically on language models. However, I believe these insights can be extrapolated to other domains where generative models are used.

The central thesis of this article is that although hallucinations can be reduced or mitigated with a variety of practical approaches, the core issue is a fundamental flaw in the assumptions about the nature of language and truth that are intrinsic to the prevalent language modeling paradigms used today. If this thesis is correct, we won't be able to solve AI hallucinations entirely with incremental improvements to current tech; we will need a new machine learning paradigm altogether.

What are Hallucinations in AI?

The term "hallucination" in the context of AI refers to the phenomenon where a large language model (LLM) or other generative AI system produces outputs that appear plausible and coherent but do not accurately reflect reality or the system's intended purpose. These hallucinations manifest as the generation of false, inaccurate, or nonsensical information that the AI presents with confidence as if it were factual.

First, a caveat. Unlike human hallucinations, which involve perceiving things that are not real, AI hallucinations are associated with the model producing unjustified responses or beliefs rather than perceptual experiences. The name "hallucination" is, therefore, imperfect, and it often leads to mistakes as people tend to anthropomorphize these models and make erroneous assumptions about how they work and the causes of these failures. However, we will stick to this name in this article because it is the prevalent nomenclature used everywhere people talk about AI. Just keep in mind we're talking about something completely different from what the term "hallucination" means in general.

Before diving into the why of AI hallucinations, let's distinguish them from other typical failures of generative models, such as out-of-distribution errors or biased outputs.

Out-of-distribution errors occur when an AI model is presented with input data significantly different from its training data, causing it to produce unpredictable or nonsensical outputs. In these cases, the model's limitations are clear, and it is evident that the input is outside of its capabilities. This is just a generalization error that usually points to one of two causes: 1) the model's hypothesis space is too constrained to capture the actual distribution of data, or 2) the available data or training regimes are insufficient to pinpoint a general enough hypothesis.

Hallucinations are more insidious than out-of-distribution errors because they happen within the input distribution, where the model is supposedly well-behaved. Even worse, due to the stochastic nature of generative models, hallucinations tend to happen entirely randomly. This means that for the same input, the model can hallucinate once out of 100 times, making it almost impossible to evaluate and debug.
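To make the "once out of 100 times" point concrete, here is a minimal sketch of sampling from a fixed output distribution. The distribution, the token strings, and the function names are all assumptions made up for illustration; a real LLM samples from a far larger vocabulary at every step.

```python
import random
from collections import Counter

# Hypothetical next-token distribution for one fixed prompt.
# The numbers are illustrative assumptions, not measurements:
# "1950" stands for the correct answer, "1980" for a hallucinated one.
NEXT_TOKEN_PROBS = {"1950": 0.99, "1980": 0.01}


def sample_answer(rng: random.Random) -> str:
    """Draw one answer according to the fixed distribution."""
    tokens = list(NEXT_TOKEN_PROBS)
    weights = list(NEXT_TOKEN_PROBS.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


def count_answers(n_samples: int, seed: int) -> Counter:
    """Ask the 'same question' n_samples times and tally the answers."""
    rng = random.Random(seed)
    return Counter(sample_answer(rng) for _ in range(n_samples))


if __name__ == "__main__":
    # Same input every time, yet a small fraction of answers is wrong.
    print(count_answers(n_samples=10_000, seed=0))
```

The input never changes between draws, so no amount of inspecting the prompt tells you which runs will go wrong. You only see the failure if you sample enough times, which is what makes this kind of error so hard to evaluate and debug.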

Biased outputs, on the other hand, arise when an AI model's training data or algorithms contain inherent biases, leading to the generation of outputs that reflect those biases, such as stereotypes or prejudices. These are often not hallucinations but realistic reproductions of human biases that pervade our societies: The model produces something that reflects the reality underlying its training data. It's just that such a reality is an ugly one. Dealing with biases in AI is one of the most critical challenges in making AI safe, but it is an entirely different problem that we can tackle in a future issue.

Hallucinations, in contrast, involve the AI model generating information that is not necessarily biased but completely fabricated or detached from reality. This makes detecting them far more difficult because the model's responses appear confident and coherent, and there is no obvious telltale sign that helps human evaluators quickly identify them.

Real-World Implications of AI Hallucinations

The occurrence of hallucinations in AI systems, particularly in large language models (LLMs), can have significant consequences, especially in high-stakes applications such as healthcare, finance, or public safety. For example, a healthcare AI model incorrectly identifying a malignant skin lesion as benign can doom a patient. On the other hand, identifying a benign skin lesion as malignant could lead to unnecessary medical interventions, also causing harm to the patient. Similarly, in the financial sector, hallucinated outputs from an AI system could result in poor investment decisions with potentially devastating economic impacts.

However, even in low-stakes applications, the insidious nature of hallucinations makes them a fundamental barrier to the widespread adoption of AI. For example, imagine you're using an LLM to generate summaries from audio transcripts of a meeting, extracting relevant talking points and actionable items. If the model occasionally hallucinates, either failing to extract a key item or producing a spurious one, it will be virtually impossible for anyone to detect that without manually reviewing the transcript, which defeats the purpose of using AI for this task in the first place.

For this reason, one of the critical challenges in addressing the real-world implications of language model hallucinations is the difficulty in effectively communicating the limitations of these systems to end-users. LLMs are trained to produce fluent, coherent outputs that appear plausible, even when factually incorrect. If the end-users of an AI system are not sufficiently informed to review the system's output with a critical eye, they may never spot any hallucinations. This leads to a chain of mistakes as the errors from the AI system propagate upstream through the layers of decision-makers in an organization. Ultimately, you could be making a terrible decision that seems entirely plausible given all the available information because the source of the error—an AI hallucination—is impossible to detect.

Thus, developing and deploying LLMs that are prone to hallucination raises critical ethical considerations. There is a need for responsible AI development practices that prioritize transparency, accountability, and the mitigation of potential harms. This includes establishing clear guidelines for testing and validating LLMs before real-world use and implementing robust monitoring and oversight mechanisms to identify and address hallucinations as they arise.

Crucially, no generative AI system today can guarantee that it doesn't hallucinate. This tech is simply unreliable in fundamental ways, so every actor in this domain, from developers to users, must be aware that there will be hallucinations in their systems and must have guardrails in place to deal with the output of unreliable AIs. This is especially perverse because we are used to software just working. Whenever software doesn't do what it should, that's a bug. However, hallucinations are not a bug of AI, at least in the current paradigm. As we will see in the next section, they are an inherent feature of how generative models work.

Why do Hallucinations Happen?

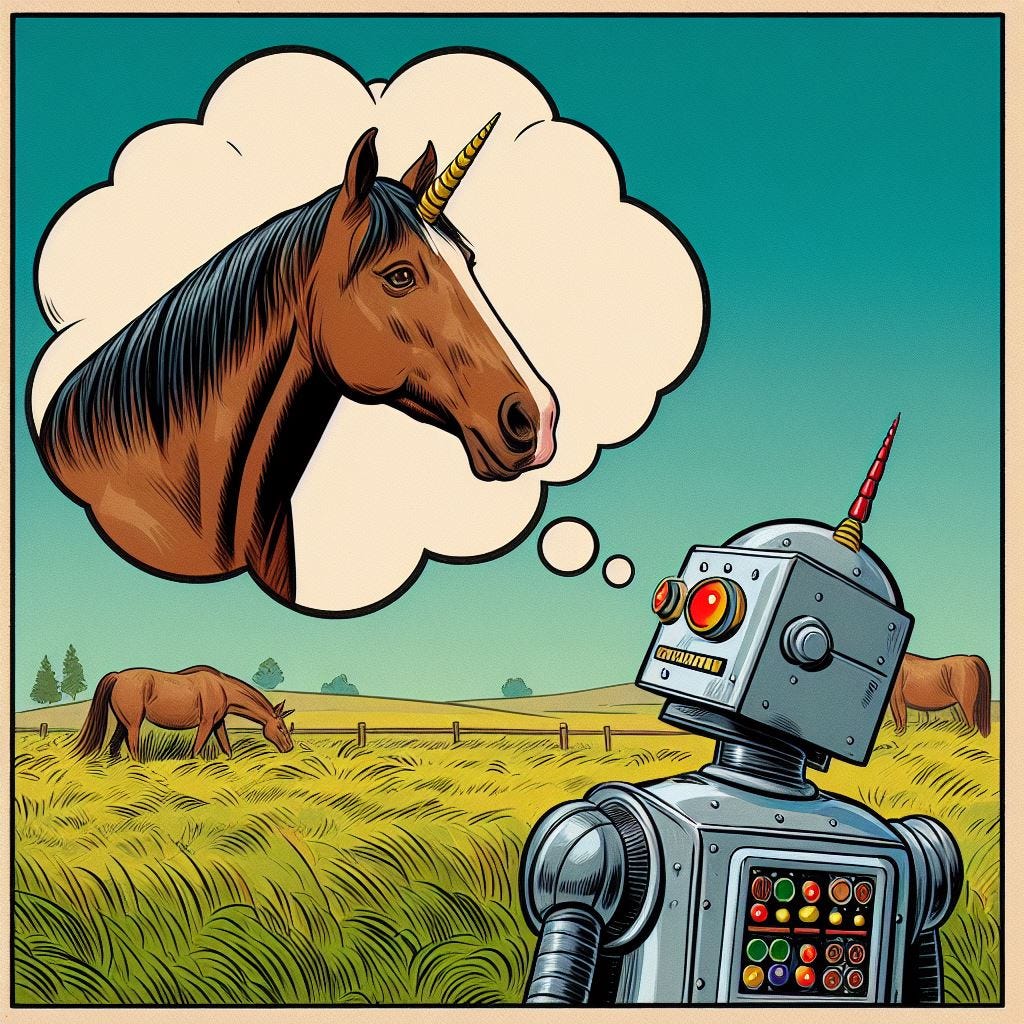

There are many superficial reasons for hallucinations, from data and modeling problems to issues with prompting. However, the underlying cause of all hallucinations, at least in large language models, is that the current language modeling paradigm used in these systems is, by design, a hallucination machine. Let's unpack that.

Generative AI models, including LLMs, rely on capturing statistical patterns in their training data to generate outputs. Rather than storing explicit factual claims, LLMs implicitly encode information as statistical correlations between words and phrases. This means the models do not have a clear, well-defined notion of what is true or false. They can only generate plausible-sounding text.

The reason this mostly works is that generating plausible-sounding text has a high probability of reproducing something true, provided the model is trained on mostly truthful data. However, LLMs are trained on vast corpora of text from the internet, which contain inaccuracies, biases, and even fabricated information. So, these models have "seen" many true sentences and thus picked up correlations between words that tend to generate true sentences. Still, they've also seen many variants of the same sentences that are slightly or even entirely wrong.

So, one of the primary reasons for the occurrence of hallucinations is the lack of grounding in authoritative knowledge sources. Without a strong foundation in verified, factual knowledge, the models struggle to distinguish truth from falsehood, generating hallucinated outputs. But this is far from the only problem. Even if you only train on factual information—assuming there would be enough of such high-quality data to begin with—the statistical nature of language models makes them susceptible to hallucination.

Suppose your model has only seen truthful sentences and learned the correlations between words in these sentences. Imagine there are two very similar sentences, both factually true, that differ in just a couple of words—maybe a date and a name, for example, "Person A was born in year X" and "Person B was born in year Y". Given the way these models work, the probability of generating a mixed-up sentence like "Person B was born in year X" is only slightly smaller than generating either of the original sentences.
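The mix-up can be reproduced with a deliberately tiny stand-in for a language model. The sketch below trains a bigram model (each word depends only on the previous word) on two true sentences about hypothetical people. The corpus, the names, and the bigram simplification are my assumptions for illustration; real LLMs condition on much longer contexts, so for them the mixed-up sentence is somewhat less likely rather than equally likely.

```python
from collections import Counter, defaultdict

# Toy training corpus: two "true" sentences about hypothetical people.
CORPUS = [
    "alice was born in 1950",
    "bob was born in 1980",
]


def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def sentence_probability(counts, sentence):
    """Probability of a sentence as a product of bigram probabilities."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        total = sum(counts[prev].values())
        if total == 0:
            return 0.0  # the previous word was never seen in training
        prob *= counts[prev][nxt] / total
    return prob


if __name__ == "__main__":
    model = train_bigram(CORPUS)
    for s in ["alice was born in 1950", "bob was born in 1950"]:
        print(s, "->", sentence_probability(model, s))
```

In this toy model the false sentence "bob was born in 1950" gets exactly the same probability as the true ones, because after the word "in" the model has no memory of which name started the sentence. Longer contexts shrink this effect but do not give the model a notion of truth.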

What's going on here is that the statistical model implicitly assumes that small changes in the input (the sequence of words) lead to small changes in the output (the probability of generating a sentence). In more technical terms, the statistical model assumes a smooth distribution, which is necessary because the size of the data the model needs to encode is orders of magnitude larger than the memory (i.e., the number of parameters) in the model. Thus, the models must compress the training corpus, and compression implies losing some information.

In other words, statistical language models inherently assume that sentences that are very similar to what they have seen in the training data are also plausible. They encode a smooth representation of language, and that's fine as long as you don't equate plausible with factual. See, these models weren't designed with factuality in mind. They were initially designed for tasks like translation, where plausibility and coherence are all that matter. It's only when you turn them into answering machines that you run into a problem.

The problem is there is nothing smooth about facts. A sentence is either factual or not; there are no degrees of truthfulness (for the most part; let's not get dragged into epistemological discussions here). But LLMs cannot, by design, define a crisp frontier between true and false sentences. All the frontiers are fuzzy, so there is no clear cutoff point where you can say that if a sentence's perplexity is above some value X, it is false. And even if you could define such a threshold, it would be different for all sentences.
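For reference, this is the usual definition of the perplexity of a sentence under a language model. The notation is introduced here and is not from the rest of the article: $w_1, \dots, w_n$ are the tokens of the sentence and $p_\theta$ is the model's next-token probability.

```latex
\mathrm{PPL}(w_1, \dots, w_n)
  = \exp\!\left( -\frac{1}{n} \sum_{i=1}^{n}
      \log p_\theta\!\left(w_i \mid w_1, \dots, w_{i-1}\right) \right)
```

Lower perplexity means the model finds the sentence more plausible. A threshold rule would declare a sentence false whenever its perplexity exceeds some fixed value, but the formula only measures how well the sentence matches the patterns the model has learned. Nothing in it refers to whether the sentence is true.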

You may ask why we can't avoid using this smooth representation altogether. The reason is that you want to generate sentences that are not in the training set. This means you must somehow guess that some sentences you have never seen are also plausible, and guessing means you must make some assumptions. The smoothness hypothesis is very reasonable—and computationally convenient, as these models are trained with gradient descent, which requires smoothness in the loss function—again, as long as you don't care about factuality. If you don't compress the training data in this smooth, lossy way, you will simply not be able to generate novel sentences at all.

In summary, this is why the current paradigm of generative AI will always hallucinate, no matter how good your data is and how elaborate your training procedures or guardrails are. The statistical language modeling paradigm, at its core, is a hallucination machine. It concocts plausible-sounding sentences by mixing and matching words seen together in similar contexts in the training set. It has no inherent notion of whether a given sentence is true or false. All it can tell is that it looks like sentences that appear in the training set.

Now, a silver lining could be that even if some false sentences are unavoidably generated, we can train the system to minimize their occurrence by showing it lots and lots of high-quality data. Can we push the probability of a hallucination so low that, in practice, it almost never happens? Unfortunately, no. Recent research suggests that if there is a sentence that can be generated at all, no matter how low its base probability, then there is a prompt that will generate it with almost 100% certainty. This means that if we introduce malicious actors into our equation, we can never be sure our system can't be jailbroken.

Mitigating Hallucinations in AI

So far, we've argued that hallucinations are inherently impossible to eliminate completely. But this doesn't mean we can't do anything about it in practice. I want to end this article with a summary of mitigation approaches used today by researchers and developers.

One key strategy is incorporating external knowledge bases and fact-checking systems into the AI models. The risk of generating fabricated or inaccurate outputs can be reduced by grounding the models in authoritative, verified information sources.
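As a rough illustration of what grounding can look like, here is a minimal sketch that checks claims against a small lookup table of verified facts. Everything in it is hypothetical: the knowledge base contents, the `(subject, relation, value)` claim format, and the assumption that claims have already been extracted from the model's output, which is a hard problem in its own right.

```python
# Hypothetical knowledge base: (subject, relation) -> verified value.
KNOWLEDGE_BASE = {
    ("alice", "born_in"): "1950",
    ("bob", "born_in"): "1980",
}


def check_claim(subject: str, relation: str, value: str) -> str:
    """Return 'supported', 'contradicted', or 'unverifiable'."""
    known = KNOWLEDGE_BASE.get((subject, relation))
    if known is None:
        # The knowledge base says nothing about this; flag it for a human.
        return "unverifiable"
    return "supported" if known == value else "contradicted"


if __name__ == "__main__":
    # Claims assumed to have been extracted from generated text.
    claims = [
        ("alice", "born_in", "1950"),
        ("bob", "born_in", "1950"),
        ("carol", "born_in", "1970"),
    ]
    for claim in claims:
        print(claim, "->", check_claim(*claim))
```

Note the third outcome. A check like this can only catch hallucinations about facts the knowledge base covers, so anything it cannot verify still needs a human or another guardrail.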

Researchers are also exploring ways to develop more robust model architectures and training paradigms less susceptible to hallucinations. This may involve increasing model complexity, incorporating explicit reasoning capabilities, or using specialized training data and loss functions.

Enhancing the transparency and interpretability of AI models is also crucial for addressing hallucinations. By making the models' decision-making processes more transparent, it becomes easier to identify and rectify the underlying causes of hallucinations.

Alongside these technical approaches, developing standardized benchmarks and test sets for hallucination assessment is crucial. This will enable researchers and developers to quantify the prevalence and severity of hallucinations and compare the performance of different models. Thus, if you can't completely eliminate the problem, at least you can quantify it and make informed decisions about where and when it is safe enough to deploy a generative model.
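Quantifying the problem can start very simply. The sketch below assumes you already have a test set where each model output has been labeled by a human as hallucinated or not, and it reports the observed rate together with a Wilson score interval. The function names and the choice of interval are mine, not a standard benchmark protocol.

```python
import math


def hallucination_rate(labels):
    """labels: list of bools, True means the output was judged a hallucination."""
    if not labels:
        raise ValueError("need at least one labeled output")
    return sum(labels) / len(labels)


def wilson_interval(n_hallucinated: int, n_total: int, z: float = 1.96):
    """Wilson score interval for a proportion (z=1.96 is roughly 95%)."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    p = n_hallucinated / n_total
    denom = 1 + z**2 / n_total
    center = (p + z**2 / (2 * n_total)) / denom
    half_width = (
        z * math.sqrt(p * (1 - p) / n_total + z**2 / (4 * n_total**2)) / denom
    )
    return max(0.0, center - half_width), min(1.0, center + half_width)


if __name__ == "__main__":
    # 100 labeled outputs, none judged to be a hallucination.
    labels = [False] * 100
    print("observed rate:", hallucination_rate(labels))
    print("plausible range:", wilson_interval(sum(labels), len(labels)))
```

The interval matters more than the point estimate. Seeing zero hallucinations in 100 labeled outputs is still compatible with a true rate of a few percent, so a clean test run on a small sample is weak evidence that a system is safe to deploy.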

Finally, addressing the challenge of hallucinations in AI requires an interdisciplinary approach involving collaboration between AI researchers, domain experts, and authorities in fields like scientific reasoning, legal argumentation, and other relevant disciplines. By fostering cross-disciplinary knowledge sharing and research, the understanding and mitigation of hallucinations can be further advanced.

Conclusion

The issue of hallucinations in AI systems, particularly in large language models, poses a significant challenge to the reliable and trustworthy deployment of these powerful technologies. Hallucinations, where AI models generate plausible-sounding but factually inaccurate outputs, can have severe consequences in high-stakes applications and undermine user trust.

The underlying causes of hallucinations stem from the fundamental limitations of current language modeling approaches, including the lack of grounding in authoritative knowledge sources, the reliance on statistical patterns in training data, and the inherent difficulty in reliably distinguishing truth from falsehood using statistics alone. These models were designed to generate plausible output similar to but not exactly the same as the training data, which, by definition, requires making up stuff.

These limitations highlight the need for more advanced techniques to better understand the nuances of language and factual claims, probably involving some fundamental paradigm shifts in machine learning that take us beyond what pure statistical models can achieve.

Mitigating hallucinations in practice requires a multifaceted approach involving incorporating external knowledge bases and fact-checking systems, developing more robust model architectures and training paradigms, leveraging human-in-the-loop and interactive learning strategies, and improving model transparency and interpretability. Standardized benchmarks and test sets for hallucination assessment and interdisciplinary collaboration between AI researchers, domain experts, and authorities in related fields will be crucial for advancing the understanding and mitigation of this challenge.

I hope this article has given you some food for thought. We went a bit deep into the technical details of how generative models work, but that is necessary to understand why these issues are so hard to solve. If you like this type of article, please let me know with a comment, and feel free to suggest future topics of interest.

Fantastic description of LLMs. I've always said they were intended to be linguistically accurate, not factually accurate but I haven't thought that the hallucinations are a feature, not a bug.

This article is at least 78% factual!

Thanks for this exploration. You're not the first one to point out that hallucinations aren't "solvable" within the current LLM architecture.

I find it fascinating that AI is kind of caught in this awkward middle of being subpar for any of its potential applications:

If you're using AI for practical, data-grounded purposes, you have to contend with hallucinations and unreliability.

If you want to use it for stuff where facts don't matter, like creative writing, you run into the issue of recycled prose and themes.

I feel like one of the most useful applications in my own life is using LLMs to brainstorm - LLMs are often "creative" enough to nudge me into an interesting direction while being able to output lots of ideas very quickly.