You and AI: the social side of artificial intelligence

Why human-AI relationships are now a crucial topic

I'm pleased to bring you this guest post by Riccardo Vocca from The Intelligent Friend, a deep dive into the very sensitive yet crucial topic of human-AI relationships. I hope you enjoy this post as much as I did, and that it sparks some interesting thoughts. It certainly did for me.

Please consider subscribing to The Intelligent Friend and supporting Riccardo. If you do subscribe, please send me a screenshot, and I'll give you a 100% discount coupon for one of my books of your choice. Sound like a deal?

— Alejandro.

By Riccardo Vocca.

AI surprises us every day. We admire its capabilities or are frightened by them, we try to find the best uses for it, and, above all, we experiment. What is the point? Throughout all this experimentation and use, what we are doing, put simply, is interacting with a technology. I use the word "interact" deliberately, because interaction carries a different weight with an entity that we humans naturally tend to anthropomorphize, that is, to attribute human characteristics to, even while knowing that there is nothing but a machine behind it. A machine that, although far more 'intelligent' than our coffee machine or our washing machine, is still not a conscious entity, whatever one might sometimes think.

Yet we build relationships based on our interactions with it, just as we do with human beings. This topic has become very important in research, as well as for the companies that own these technologies, for several reasons:

First, because it is important to understand what is peculiar to these interactions and how they differ from interactions between humans;

Second, to understand which aspects of these interactions affect our eventual adoption and use of this technology, and how;

And, finally, because AI is already (and will increasingly be) hugely popular, and it is crucial to understand what this implies for human nature.

In this regard, I am really happy today to be able to talk to you about this topic in Alejandro Piad Morffis's newsletter, one of the publications I read most closely and one I began interacting with as soon as I started writing my own, The Intelligent Friend, which focuses on human-AI relationships. My issues are based exclusively on scientific papers and, naturally, today too we will be "helped" by a few works (three, to be specific).

We'll try to explore a specific aspect of relationships: how do our "social" interactions change depending on whether we are dealing with a human or with AI? First, we will try to answer this with a pioneering paper on the topic, published in 2017, five years before ChatGPT's rise to popularity in 2022. Then we will move on to Siri, the intelligent virtual agent that has probably accompanied people the most in recent years, and finally we will try to understand how we humans perceive collaboration with AI in a very particular context: a game.

Are you ready? Let's dive in!

Who am I chatting with?

As you will soon notice, especially if you read my newsletter, I like to explain scientific concepts with concrete, impactful examples. So let's start with one.

Let's say you are chatting with a friend on social media. You share jokes, news, and opinions. Then you start chatting with a chatbot about various things. Do you think this changes how extroverted or open you are? 'There shouldn't be any difference,' you might be thinking. Well, that's not really the case. The study by Mou & Xu (2017) tried to understand how we display different personality traits depending on whether we interact with a human friend or with a chatbot. Where did the researchers start?

First, when we talk about human-machine interactions, there are some fundamental paradigms. One of these, which also underpins this study, is the 'Computers Are Social Actors' paradigm (abbreviated as 'CASA'). It was developed by Nass et al. (1994, 1996), who tried to replicate results from social science research using computers or televisions. The crucial concept is as intuitive as it is powerful: we treat computers as if they were real people.

This paradigm has been widely adopted in research because of its many applications and its importance (if you are interested, I recommend reading the dedicated section of the paper; it is really interesting!), though naturally I will not go deeper into it here. The important thing is to keep in mind the concept underlying the paradigm, because it is also the conceptual basis of Mou & Xu's (2017) study. The researchers investigated interactions with chatbots and with humans on the same platform, in a social interaction context, hypothesizing that people would exhibit different levels of certain personality traits and characteristics.

Different subjects, different reactions

For this purpose, the researchers chose Little Ice, one of the first widely used interactive chatbots, developed by Microsoft, together with WeChat, the platform in which Little Ice was embedded. Ten volunteers, all regular WeChat users, were recruited to share two chat transcripts: one with Little Ice and one with a human friend. When these conversations took place, the volunteers had no idea they would become part of a study; these were simply their everyday chats.

I would like to point out that these people were asked to select a conversation with a regular friend, not a close friend or a significant other. Furthermore, only the first conversations with Little Ice and with friends were analyzed, to avoid bias from the development of the relationship. The volunteers were then rated on personality and communication attributes by 277 viewers of the transcripts, and they also completed self-assessment measures.

The results were very interesting: distinct behavioral patterns were observed when WeChat users interacted with Little Ice compared to human friends. Users exhibited higher levels of openness, agreeableness, extroversion, and conscientiousness when conversing with humans, while showing increased neuroticism with AI.

This suggests that users tend to display more socially desirable traits in human interactions. Furthermore, and this is one of the things I found most interesting, this exploratory study suggests that users may carry social scripts from human interactions over to human-computer interactions, though, it should be underlined, with less openness and extroversion.

As the authors themselves point out, this was one of the first exploratory studies to examine the differences between interactions with humans and interactions with a chatbot in a social media context. And it dates from 2017, a time that now feels very far from ChatGPT and similarly advanced technologies. This study, like many others, thus began to raise important questions about how relationships with virtual agents and chatbots fit into our daily lives. How has this relationship evolved since?

It's not just a matter of who, but also of what

To explore a possible answer to this question, I will present the results of a different study, whose protagonist is a "person" we know well. You talk to her to be reminded of things, to get help while you cook, or perhaps to call someone. Yes, you guessed it: I am talking about Siri. "She", together with Alexa, has been the subject of numerous studies on the factors that could affect interactions with consumers. The study by Lee, Kavya and Lasser (2021) is, in my opinion, particularly interesting because it places a recurring theme at its center: trust.

If you think about it, trust is a fundamental factor in human relationships. We often judge our friends by how much we trust them, by how much we can confide in them. This centrality of trust is also present in human-machine relationships.

In this study, trust is defined as "the attitude that an agent will help achieve an individual's goals in a situation characterized by uncertainty and vulnerability" (Hancock et al., 2011). However, the authors also investigate another fundamental component of the relationship: the type of task.

To explain it, let's pause for a moment.

Think of three things you use Siri for on a daily basis. Your list probably includes searching for information, scheduling, or doing calculations. However, you might also have fun conversations with Siri or even turn to it for support.

The former are defined as "functional" tasks, the latter as "social" tasks. The authors' objective was to understand how trust in Siri relates to these different types of tasks. In fact, the study is quite elaborate and explores the interplay of various factors, such as gender, social presence, and the actual type of relationship with AI. Nevertheless, since we have already covered quite a lot, and another interesting paper is coming shortly, let's focus on these aspects, which are interesting in their own right (if you want, you can find the paper here to learn more about the rest).

So, as we were saying: how is trust in Siri related to a functional vs. a social task? To find out, the researchers measured five dimensions of trust in participants' interactions with Siri. On three of them, namely perceived reliability, technical competence, and perceived understandability, there were significant differences: people trusted Siri more on these dimensions when dealing with a functional task.

However, there is another interesting theme: the part of trust most connected to the emotional sphere, which comprises the two remaining dimensions I have not mentioned so far and is known as "affective trust".

These two dimensions, "faith" and "personal attachment", did not show a significant difference between functional and social tasks. From this, one could infer that, at least in this context, the participants once again perceived the social task as something separate.

AI, games and collaboration

So far, we have seen a study from a few years ago that explored how we behave differently in some conversations with chatbots. We then saw the (partial) results of a more recent study, which revealed that what matters is not only who we interact with (Siri), but also what we do in the interaction (functional vs. social task). The third paper I chose for this issue, which I had the pleasure of writing here on Mostly Harmless Ideas, will intrigue several of you. To introduce it, consider this question: in what setting have we often interacted with a 'computer' for fun, without it actually being a 'physical computer'?

It won't take long for the gamers among the readers to respond.

Naturally, I'm talking about video games! Video games are a very interesting area for AI, both for their commercial developments and for the opportunities they offer to study human-machine relationships and interactions. In this paper by IBM researchers, a game is used as a context to measure and evaluate the effects of collaboration between AI and players.

In particular, the researchers measured different dimensions of players' perceptions of their partner (rapport, intelligence, creativity, likeability). Players were told that they would collaborate with a human or with an AI, or were not told what kind of partner they had.

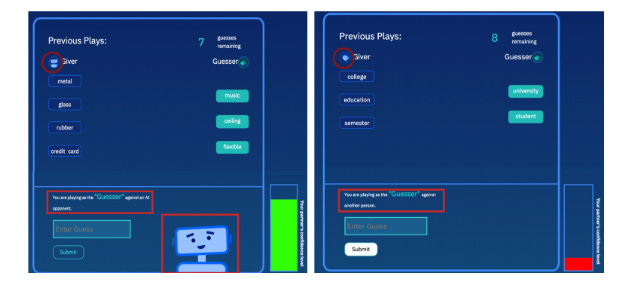

To understand user perceptions of collaborators, the authors created a two-player cooperative game called Wordgame. In Wordgame, one player (the "giver") provides clues to another player (the "guesser") who tries to identify a hidden target word.

The game starts with an AI-provided hint (e.g., "car" for the target word "engine"). The guesser has 10 attempts, and each guess follows a hint. Because the game is cooperative, players work together toward the guesser's success, which fosters openness and honesty toward a shared goal.

What were the results? People who believed their partner was human found them more likeable, intelligent, and creative, even though the interactions were identical to those with the AI. The effect also shows in how people described their partners: they used more positive words when they thought they were playing with another human, and more negative ones when they believed their partner was an AI.

A final note

I wrote this issue, briefly presenting concepts and results from different papers on interactions and relationships between humans and AI, for a few reasons.

First of all, I wanted to highlight how research into human-machine interactions and relationships, while building on fundamental paradigms and theories that are themselves constantly developing, is evolving very quickly and will produce increasingly surprising findings.

Among papers from 2022-2024, for example, there is evidence that people can feel "love" for Alexa, that your partner could be jealous of ChatGPT if you use it to find their birthday present, and that people may be more or less willing to share sensitive information with a chatbot than with a doctor.

Second, I wanted to highlight the variety and many facets of this topic and, above all, its importance, at a time when we often focus heavily on the technical aspects and push the relational ones, which are incredibly interesting, into the background.

Third, I chose three studies that, honestly, I thought could intrigue you and give you a first glimpse of the thousand nuances the topic of relationships still hides.

Finally, and this is one of my recurring mantras, I wanted to underline the beauty of research and how much can be discovered by reading and sharing the results of scientific advances. Sharing knowledge is fundamental, and this is why I like to showcase the work of many researchers by writing about it in my newsletter.

I thank Alejandro again for giving me the opportunity to write this piece for you. If the topic of relationships has interested or intrigued you in the slightest, stop by my newsletter, The Intelligent Friend, and take a look. See you soon!
